1. Abstract

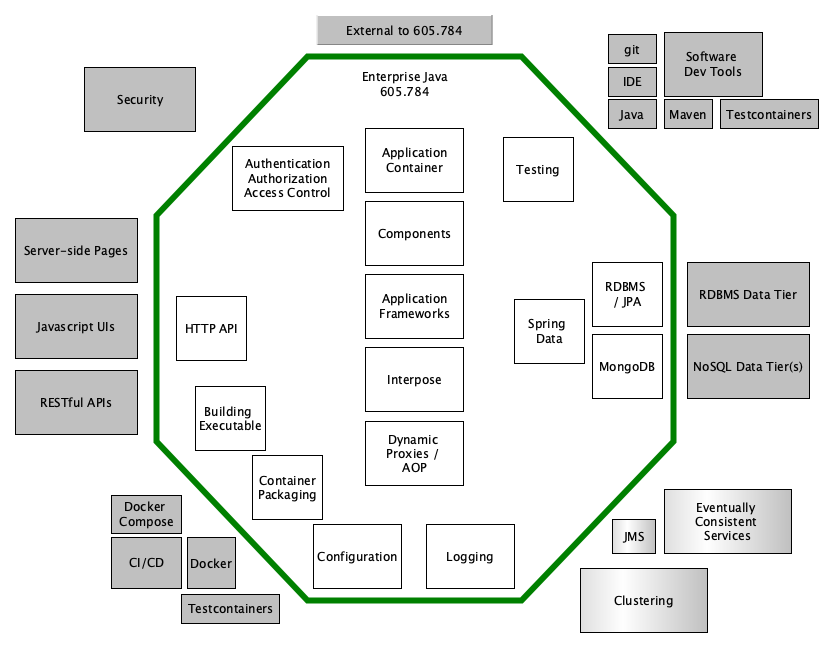

This book contains course notes covering Enterprise Computing with Java. This comprehensive course explores core application aspects for developing, configuring, securing, deploying, and testing a Java-based service using a layered set of modern frameworks and libraries that can be used to develop full services and microservices to be deployed within a container. The emphasis of this course is on the center of the application (e.g., Spring, Spring Boot, Spring Data, and Spring Security) and will lay the foundation for other aspects (e.g., API, SQL and NoSQL data tiers, distributed services) covered in related courses.

Students will learn thru lecture, examples, and hands-on experience in building multi-tier enterprise services using a configurable set of server-side technologies.

Students will learn to:

-

Implement flexibly configured components and integrate them into different applications using inversion of control, injection, and numerous configuration and auto-configuration techniques

-

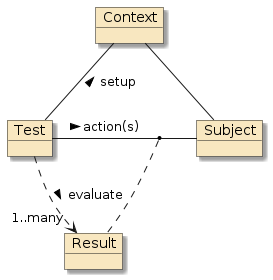

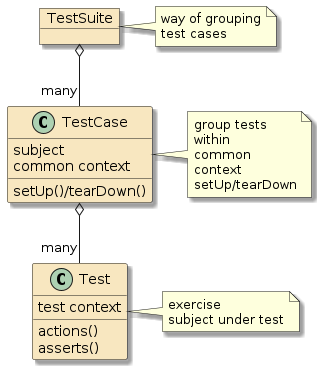

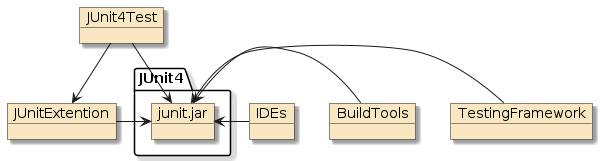

Implement unit and integration tests to demonstrate and verify the capabilities of their applications using JUnit and Spock

-

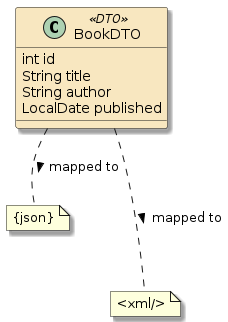

Implement basic API access to service logic using using modern RESTful approaches that include JSON and XML

-

Implement basic data access tiers to relational and NoSQL databases using the Spring Data framework

-

Implement security mechanisms to control access to deployed applications using the Spring Security framework

Using modern development tools students will design and implement several significant programming projects using the above-mentioned technologies and deploy them to an environment that they will manage.

The course is continually updated and currently based on Java 11, Spring 5.x, and Spring Boot 2.x.

Enterprise Computing with Java (605.784.8VL) Course Syllabus

copyright © 2026 jim stafford (jim.stafford@jhu.edu)

2. Course Description

2.1. Meeting Times/Location

-

Wednesdays, 4:30-7:10pm EST

-

onsite: APL K219

-

via Zoom Meeting ID: 931 3887 6892

-

2.2. Course Goal

The goal of this course is to master the design and development challenges of a single application instance to be deployed in an enterprise-ready Java application framework. This course provides the bedrock for materializing broader architectural solutions within the body of a single instance.

2.3. Description

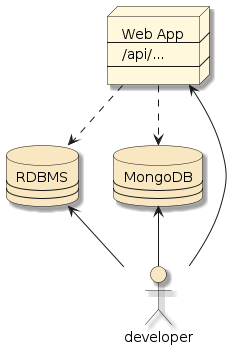

This comprehensive course explores core application aspects for developing, configuring, securing, deploying, and testing a Java-based service using a layered set of modern frameworks and libraries that can be used to develop full services and microservices to be deployed within a container. The emphasis of this course is on the center of the application (e.g., Spring, Spring Boot, Spring Data, and Spring Security) and will lay the foundation for other aspects (e.g., API, SQL and NoSQL data tiers, distributed services) covered in related courses.

Students will learn thru lecture, examples, and hands-on experience in building multi-tier enterprise services using a configurable set of server-side technologies.

Students will learn to:

-

Implement flexibly configured components and integrate them into different applications using inversion of control, injection, and numerous configuration and auto-configuration techniques

-

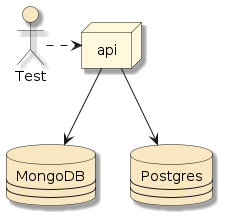

Implement unit and integration tests to demonstrate and verify the capabilities of their applications using JUnit

-

Implement API access to service logic using using modern RESTful approaches that include JSON and XML

-

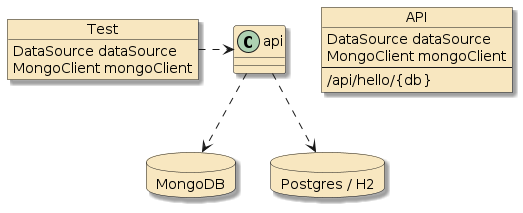

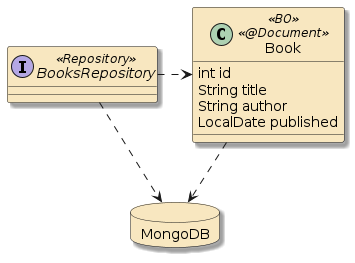

Implement data access tiers to relational and NoSQL (MongoDB) databases using the Spring Data framework

-

Implement security mechanisms to control access to deployed applications using the Spring Security framework

-

Package, run, and test services within a Docker container

Using modern development tools students will design and implement several significant programming projects using the above-mentioned technologies in an environment that they will manage.

The course is continually updated and currently based on Java 21, Maven 3, Spring 6.x, and Spring Boot 3.x.

2.4. Student Background

-

Prerequisite: 605.481 Distributed Development on the World Wide Web or equivalent

-

Strong Java programming skills are assumed

-

Familiarity with Maven and IDEs is helpful

-

Familiarity with Docker (as a user) can be helpful in setting up a local development environment quickly

2.5. Student Commitment

-

Students should be prepared to spend between 6-10 hours a week outside of class. Time spent can be made efficient by proactively keeping up with class topics and actively collaborating with the instructor and other students in the course.

2.6. Course Text(s)

The course uses no mandatory text. The course comes with many examples, course notes for each topic, and references to other free Internet resources.

2.7. Required Software

Students are required to establish a local development environment.

-

Software you will need to load onto your local development environment:

-

Git Client

-

Java JDK 21

-

Maven 3 (>= 3.6.3)

-

IDE (IntelliJ IDEA Community Edition or Pro or Eclipse/STS)

-

The instructor will be using IntelliJ IDEA CE in class, but Eclipse/STS is also a good IDE option. It is best to use what you are already comfortable using.

-

-

JHU VPN (Open Pulse Secure) — workarounds are available

-

-

Software you will ideally load onto your local development environment:

-

Docker

-

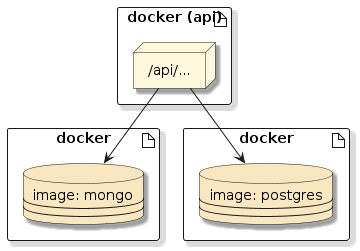

Docker can be used to automate software installation and setup and implement deployment and integration testing techniques. Several pre-defined images, ready to launch, will be made available in class.

-

-

curl or something similar

-

Postman API Client or something similar

-

-

Software you will need to install if you do not have Docker

-

MongoDB

-

-

High visibility software you will use that will get downloaded and automatically used through Maven.

-

application framework (Spring Boot, Spring).

-

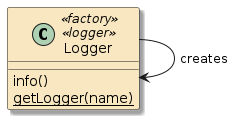

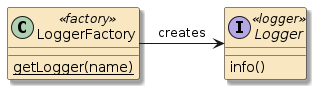

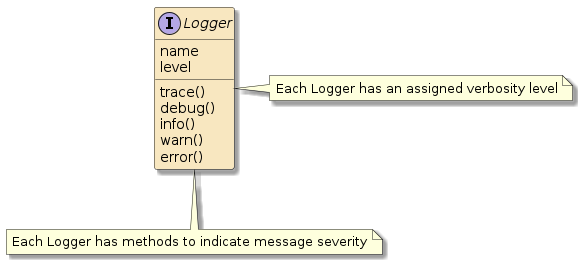

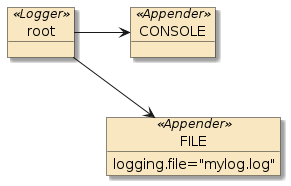

SLF/Logback

-

a relational database (H2 Database Engine) and JPA persistence provider (Hibernate)

-

JUnit

-

Testcontainers

-

2.8. Course Structure

Lectures are conducted live each week and reflect recent/ongoing student activity. Students may optionally attend the lecture live at JHU, online, and/or watch the recording based on their personal schedule and review. There is no required attendance for the live lecture. Emphasis will be made to make the recorded video a valuable asset in all cases.

The course materials consist of a large set of examples that you will download, build, and work with locally. The course also provides a set of detailed course notes for each lecture and an associated assignment active at all times during the semester. Topics and assignments have been grouped into application development, service/API tier, containers, and data tier. Each group consists of multiple topics that span one or more weeks.

The examples are available in a Gitlab public repository. The course notes are available in HTML and PDF format for download. All content or links to content is published on the course public website (https://jcs.ep.jhu.edu/ejava-springboot/). To help you locate and focus on current content and not be overwhelmed with the entire semester, examples and links to content are activated as the semester progresses. A list of "What is new" and "Student TODOs" is published weekly before class to help you keep up to date and locate relevant material. The complete set of content from the previous semester is always available from the legacy link (https://jcs.ep.jhu.edu/legacy-ejava-springboot/)

2.9. Grading

-

100 >= A >= 90 > B >= 80 > C >= 70 > F

Assessment |

% of Semester Grade |

Class/Newsgroup Participation |

10% (9pm EST, Wed weekly cut-off) |

Assignment 0: Application Build |

5% (##) |

Assignment 1: Application Config |

20% |

Assignment 2: Web API |

15% |

Assignment 3: Security |

15% |

Assignment 4: Integration Testing and Containers |

10% |

Assignment 5: Database |

25% |

|

Do not host your course assignments in a public Internet repository.

Course assignments should not be posted in a public Internet repository. If using an Internet repository, only the instructor should have access. |

-

Assignments will be done individually and most are graded 100 though 0, based on posted project grading criteria.

-

## Assignment 0 will be graded on a done (100)/not-done(0) basis and must be turned in on-time in order to qualify for a REDO. The intent of this requirement is to promote early activity with development and early exchange of questions/answers and artifacts between students and instructor.

-

-

Class/newsgroup participation will be based on instructor judgment whether the student has made a contribution to class to either the classroom or newsgroup on a consistent weekly basis. A newsgroup contribution may be a well-formed technical observation/lesson learned, a well formed question that leads to a well formed follow up from another student, or a well formed answer/follow-up to another student’s question. Well formed submissions are those that clearly summarize the topic in the subject, and clearly describe the objective, environment, and conditions in the body. The instructor will be the judge of whether a newsgroup contribution meets the minimum requirements for the week. The intent of this requirement is to promote active and open collaboration between class members.

-

Weekly cut-off for newsgroup contributions is each Wed @9pm EST

-

2.10. Grading Policy

-

Late assignments will be deducted 10pts/week late, starting after the due date/time, with a one-time exception. A student may submit a single project up to 4 days late without receiving approval and still receive complete credit. Students taking advantage of the "free first pass" should still submit an e-mail to the instructor and grader(s) notifying them of their intent.

-

Class attendance is strongly recommended, but not mandatory. The student is responsible for obtaining any written or oral information covered during their absence. Each session will be recorded. A link to the recording will be posted on Canvas.

2.11. Academic Integrity

Collaboration of ideas and approaches are strongly encouraged. You may use partial solutions provided by others as a part of your project submission. However, the bulk usage of another students implementation or project will result in a 0 for the project. There is a difference between sharing ideas/code snippets and turning in someone else’s work as your own. When in doubt, document your sources.

Do not host your course assignments in a public Internet repository.

2.12. Instructor Availability

I am available at least 20min before class, breaks, and most times after class for extra discussion. I monitor/respond to e-mails and the newsgroup discussions and hold ad-hoc office hours via Zoom in the evening and early morning hours (EST) plus weekends.

I provide detailed answers to assignment and technical questions through the course newsgroup. You can get individual, non-technical questions answered via the instructor email.

2.13. Communication Policy

I provide detailed answers to assignment and technical questions through the course newsgroup. You can get individual, non-technical questions answered via email but please direct all technical and assignment questions to the newsgroup. If you have a question or make a discovery — it is likely pertinent to most of the class and you are the first to identify.

-

Newsgroup: [Canvas Course Discussions]

-

Instructor Email: jim.stafford@jhu.edu

I typically respond to all e-mails and newsgroup posts in the evening and early morning hours (EST). Rarely will a response take longer than 24 hours. It is very common for me to ask for a copy of your broken project so that I can provide more analysis and precise feedback. This is commonly transmitted as an early submission in Canvas.

2.14. Office Hours

Students needing further assistance are welcome to schedule a web meeting using Zoom Conferencing. Most conference times will be between 8 and 10pm EST and 6am to 5pm EST weekends.

3. Course Assignments

3.1. General Requirements

-

Assignments must be submitted to Canvas with source code in a standard archive file. "target" directories with binaries are not needed and add unnecessary size.

-

All assignments must be submitted with a README that points out how the project meets the assignment requirements.

-

All assignments must be written to build and run in the grader’s environment in a portable manner using Maven 3. This will be clearly spelled out during the course and you may submit partial assignments early to get build portability feedback (not early content grading feedback).

-

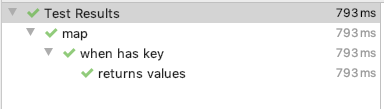

Test Cases must be written using JUnit 5 and run within the Maven surefire and failsafe environments.

-

The course repository will have an assignment-support and assignment-starter set of modules.

-

The assignment-support modules are to be referenced as a dependency and not cloned into student submissions.

-

The assignment-starter modules are skeletal examples of work to be performed in the submitted assignment.

-

3.2. Submission Guidelines

You should test your application prior to submission by

-

Verify that your project does not require a pre-populated database. All setup must come through automated test setup.

This will make sure you are not depending on any residue schema or data in your database.

-

Run maven clean and archive your project from the root without pre-build target directory files.

This will help assure you are only submitting source files and are including all necessary source files within the scope of the assignment.

-

Move your localRepository (or set your settings.xml#localRepository value to a new location — do not delete your primary localRepository)

This will hide any old module SNAPSHOTs that are no longer built by the source (e.g., GAV was changed in source but not sibling dependency).

-

Explode the archive in a new location and run mvn clean install from the root of your project.

This will make sure you do not have dependencies on older versions of your modules or manually installed artifacts. This, of course, will download all project dependencies and help verify that the project will build in other environments. This will also simulate what the grader will see when they initially work with your project.

-

Make sure the README documents all information required to demonstrate or navigate your application and point out issues that would be important for the evaluator to know (e.g., "the instructor said…")

4. Syllabus

| # | Date | Lectures | Assignments/Notes |

|---|---|---|---|

Aug27 |

|

|

|

|

|||

Sep03 |

|||

Sep10 |

|

||

Logging notes |

|||

Sep17 |

|

| # | Date | Lectures | Assignments/Notes |

|---|---|---|---|

Sep24 |

|||

Oct01 *Async |

|||

Oct08 |

|

||

Oct15 |

|

||

Oct22 |

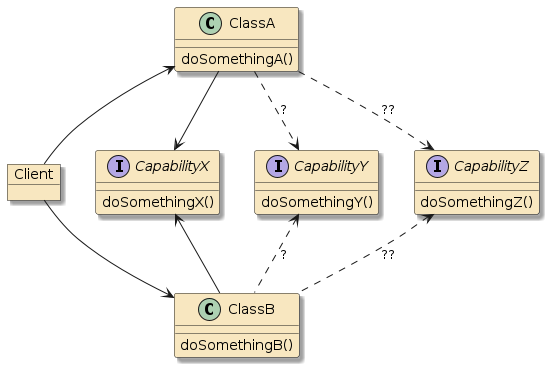

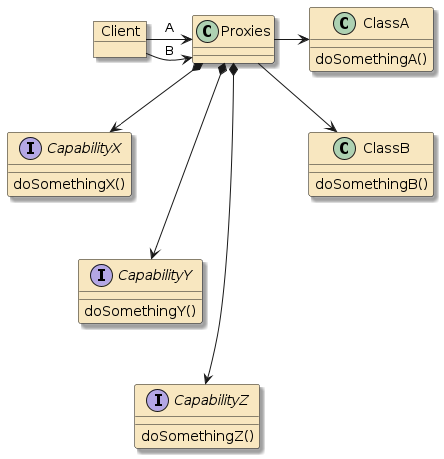

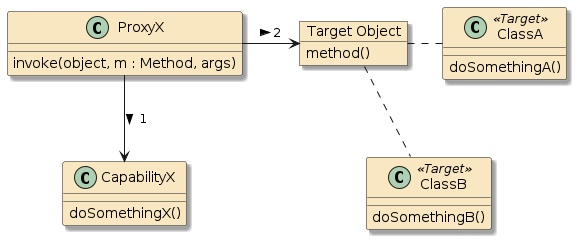

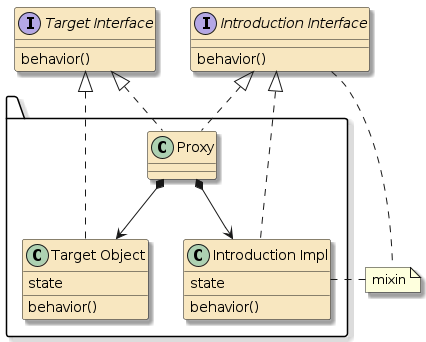

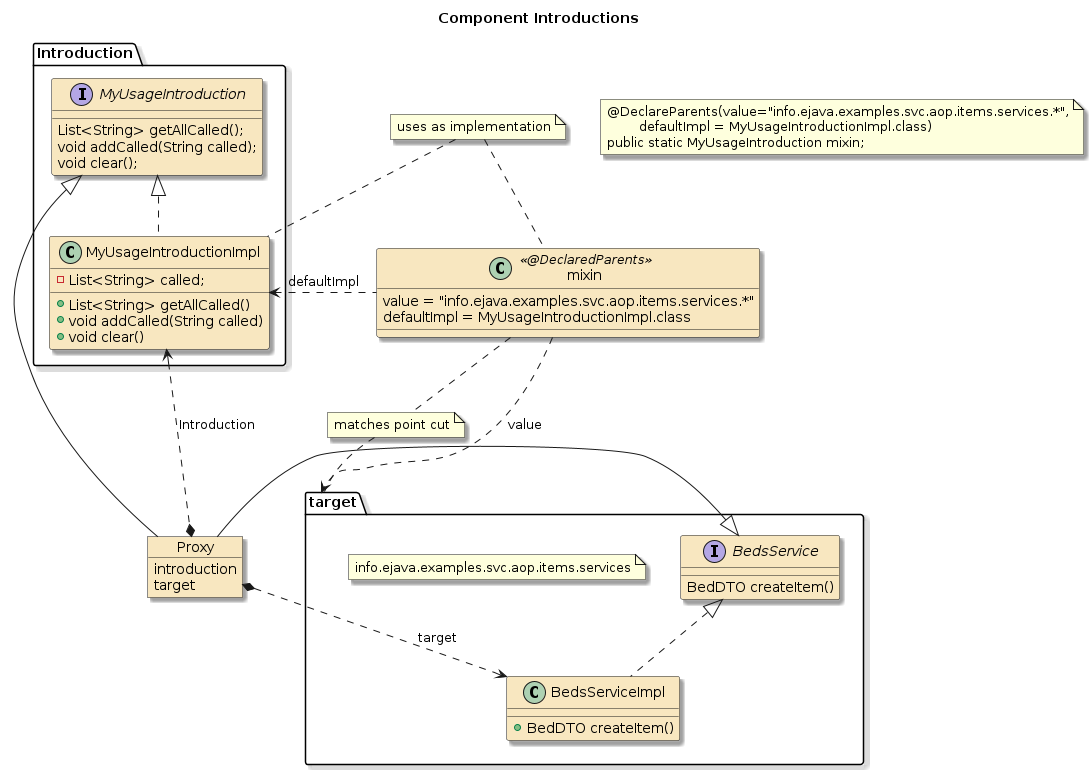

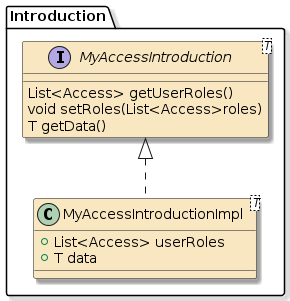

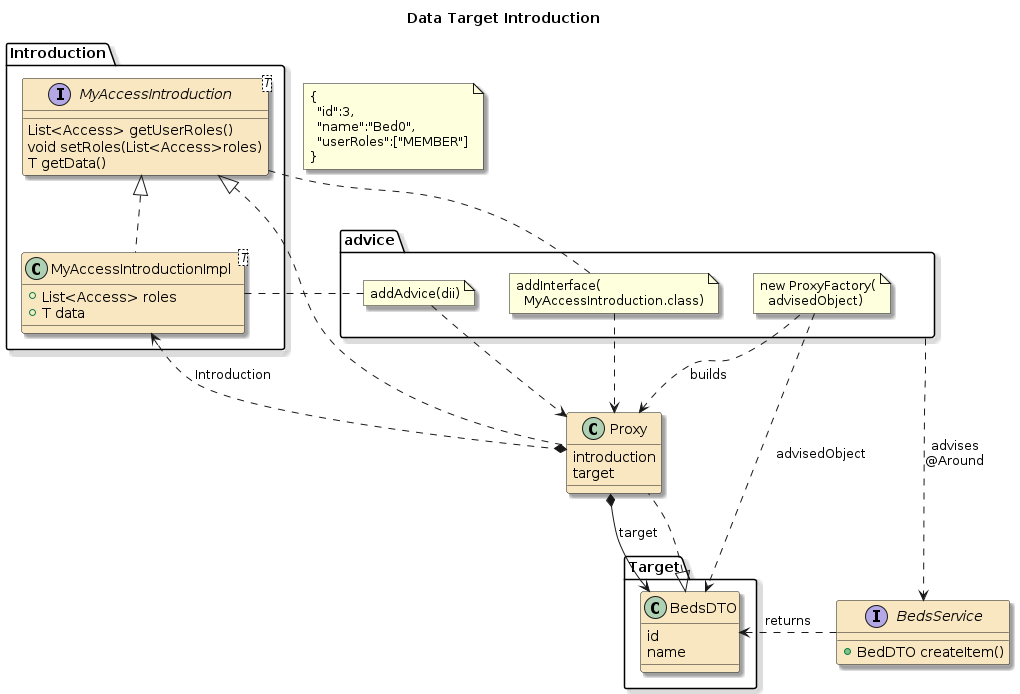

AOP and Method Proxies notes |

|

| # | Date | Lectures | Assignments/Notes |

|---|---|---|---|

Oct29 |

|||

Nov05 |

|||

Nov12 |

|||

Nov19 |

|||

Nov26 |

Thanksgiving |

no class |

|

Dec03 |

Validation notes |

Development Environment Setup

copyright © 2026 jim stafford (jim.stafford@jhu.edu)

5. Introduction

Participation in this course requires a local development environment. Since competence using Java is a prerequisite for taking the course, much of the content here is likely already installed in your environment.

Software versions do not have to be latest-and-greatest. For the most part, the firm requirement is that

-

your JDK must be at least Java 21

-

the code you submit must be compliant with Java 21 environments

You must manually download and install some of the software locally (e.g., IDE). Some software installations (e.g., MongoDB) have simple Docker options. The remaining set will download automatically and run within Maven. Some software is needed day 1. Others can wait.

Rather than repeat detailed software installation procedures for the various environments, I will list each one, describe its purpose in the class, and direct you to one or more options to obtain. Please make use of the course newsgroup if you run into trouble or have questions.

5.1. Goals

The student will:

-

setup required tooling in local development environment and be ready to work with the course examples

5.2. Objectives

At the conclusion of these instructions, the student will have:

-

installed Java JDK 21

-

installed Maven 3

-

installed a Git Client and checked out the course examples repository

-

installed a Java IDE (IntelliJ IDEA Community Edition, Eclipse Enterprise, or Eclipse/STS)

-

installed a Web API Client tool

-

optionally installed Docker

-

conditionally installed Mongo

6. Software Setup

6.1. Java JDK (immediately)

You will need a JDK 21 compiler and its accompanying JRE environment immediately in class. Everything we do will revolve around a JVM.

-

For Mac and Unix-like platforms, SDKMan is a good source for many of the modern JDK images.

$ sdk list java | grep 21 | grep ora

| >>> | 21.0.8 | oracle | installed | 21.0.8-oracle

| | 21.0.7 | oracle | | 21.0.7-oracle

| | 21.0.6 | oracle | | 21.0.6-oracle

...$ sdk install java 21.0.8-oracle

After installing and placing the bin directory in your PATH, you should be able to execute the following commands and output a version 21.x of the JRE and compiler.

$ java --version java 21.0.8 2025-07-15 LTS Java(TM) SE Runtime Environment (build 21.0.8+12-LTS-250) Java HotSpot(TM) 64-Bit Server VM (build 21.0.8+12-LTS-250, mixed mode, sharing) $ javac --version javac 21.0.8

6.2. Git Client (immediately)

You will need a Git client immediately in class. Note that most IDEs have a built-in/internal Git client capability, so the command line client shown here may not be absolutely necessary. If you chose to use your built-in IDE Git client, just translate any command-line instructions to GUI commands. If you have git already installed, it is highly unlikely you need to upgrade.

Download and install a Git Client.

-

All platforms - Git-SCM

|

I have git installed through brew on macOS and recently updated using the following $ brew upgrade git |

| If you already have git installed and working — no need for upgrade to latest. |

$ git version git version 2.51.0

Checkout the course baseline.

$ git clone https://gitlab.com/ejava-javaee/ejava-springboot.git ... $ ls | sort ... assignment-starter assignment-support build common ... pom.xml

Each week you will want to update your copy of the examples as I updated and release changes.

$ git checkout main # switches to main branch $ git pull # merges in changes from origin

|

Updating Changes to Modified Directory

If you have modified the source tree, you can save your changes to a new branch using the following: $ git status #show me which files I changed $ git diff #show me what the changes were $ git checkout -b new-branch #create new branch $ git commit -am "saving my stuff" #commit my changes to new branch $ git checkout main #switch back to course baseline $ git pull #get latest course examples/corrections |

|

Saving Modifications to an Existing Branch

If you have made modifications to the source tree while in the wrong branch, you can save your changes in an existing branch using the following: $ git stash #save my changes in a temporary area $ git checkout existing-branch #go to existing branch $ git commit -am "saving my stuff" #commit my changes to existing branch $ git checkout main #switch back to course baseline $ git pull #get latest course examples/corrections |

6.3. Maven 3 (immediately)

You will need Maven immediately in class. We use Maven to create repeatable and portable builds in class. This software build system is rivaled by Gradle. However, everything presented in this course is based on Maven and there is no feasible way to make that optional.

Download and install Maven 3.

-

All platforms - Apache Maven Project

Place the $MAVEN_HOME/bin directory in your $PATH so that the mvn command can be found.

$ mvn --version Apache Maven 3.9.11 (3e54c93a704957b63ee3494413a2b544fd3d825b) Maven home: /usr/local/Cellar/maven/3.9.11/libexec Java version: 21.0.8, vendor: Oracle Corporation, runtime: .../.sdkman/candidates/java/21.0.8-oracle Default locale: en_US, platform encoding: UTF-8 OS name: "mac os x", version: "15.6.1", arch: "aarch64", family: "mac"

Setup any custom settings in $HOME/.m2/settings.xml.

This is an area where you and I can define environment-specific values referenced by the build.

<?xml version="1.0"?>

<settings xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/settings-1.0.0.xsd">

<!-- keep as default

<localRepository>somewhere_else</localRepository>

-->

<offline>false</offline>

<mirrors>

<!-- uncomment when JHU VPN unavailable

<mirror>

<id>ejava-nexus</id>

<mirrorOf>ejava-nexus,ejava-nexus-snapshots,ejava-nexus-releases</mirrorOf>

<url>file://${user.home}/.m2/repository/</url>

</mirror>

--> (1) (2)

</mirrors>

<profiles>

</profiles>

<activeProfiles>

<!-- activate Docker modules when published

<activeProfile>docker</activeProfile>

-->

</activeProfiles>

</settings>| 1 | make sure your ejava-springboot repository:main branch is up to date and installed (i.e., mvn clean install -f ./build; mvn clean install) prior to using local mirror |

| 2 | the URL in the mirror must be consistent with the localRepository value.

The value shown here assumes the default, $HOME/.m2/repository value. |

Attempt to build the source tree. Report any issues to the course newsgroup.

$ pwd .../ejava-springboot $ mvn install -f build ... [INFO] ---------------------------------------- [INFO] BUILD SUCCESS [INFO] ---------------------------------------- $ mvn clean install ... [INFO] ---------------------------------------- [INFO] BUILD SUCCESS [INFO] ----------------------------------------

6.4. Java IDE (immediately)

You will realistically need a Java IDE very early in class.

If you are a die-hard vi, emacs, or text editor user — you can do a lot with your current toolset and Maven.

However, when it comes to code refactoring, inspecting framework API classes, and debugging, there is no substitute for a good IDE.

I have used Eclipse/STS for many years and some students in previous semester have used Eclipse installations from a previous Java-development course or work experience.

They are free and work well.

I will actively be using IntelliJ IDEA Community Edition.

The community edition is free and contains most of the needed support.

The professional edition is available free for 1 year (plus renewals) to anyone supplying a .edu e-mail.

| It is up to you what IDE you use. Using something familiar is always the best first choice. |

Download and install an IDE for Java development.

-

IntelliJ IDEA Community Edition

-

All platforms - Jetbrains IntelliJ

-

-

Eclipse/STS

-

All platforms - Spring.io /tools

-

-

Eclipse/Enterprise Java and Web Developers (or whatever…)

-

All platforms - eclipse.org

-

Load an attempt to run the examples in

-

app/app-build/java-app-example

6.5. Web API Client tool (not immediately)

Within the first month of the course, it will be helpful for you to have a client that can issue POST, PUT, and DELETE commands in addition to GET commands over HTTP. This will not be necessary until a few weeks into the semester.

Some options include:

-

curl - command line tool popular in Unix environments and likely available for Windows. All of my Web API call examples are done using curl.

-

Postman API Client - a UI-based tool for issuing and viewing web requests/responses. I personally do not like how "enterprisey" Postman has become. It used to be a simple browser plugin tool. However, the free version works and seems to only require a sign-up login.

$ curl -v -X GET https://ep.jhu.edu/

<!DOCTYPE html>

<html class="no-js" lang="en">

<head>

...

<title>Johns Hopkins Engineering | Part-Time & Online Graduate Education</title>

...6.6. Install Docker (not immediately)

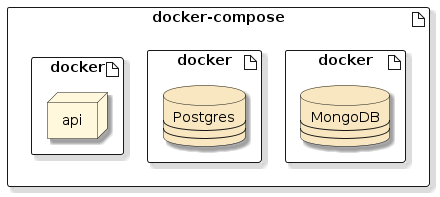

We will cover and make use of Docker since it is highly likely that anything you develop professionally will be deployed with Docker or will leverage it in some way. Spring/Spring Boot has also embraced Docker and Testcontainers as their preferred integration environment. There will be time-savings setup of backend databases as well as options in assignments where Docker desired. Once the initial investment of installing Docker has been tackled — software deployments, installation, and executions become very portable and easy to achieve.

| I have made Docker optional for the students who cannot install. Let me know where you stand on this installation. |

Docker can serve three purposes in class:

-

automates example database and JMS resource setup

-

provides a popular example deployment packaging

-

provides an integration test platform option with Maven plugins or with Testcontainers

Without Docker installation, you will

-

need to manually install MongoDB native to your environment

-

be limited to conceptual coverage of deployment and testing options in class

Download and install Docker (called "Docker Desktop" these days).

-

All platforms - Docker.com

-

Optionally install - docker-compose

$ docker -v

Docker version 28.3.2, build 578ccf6

$ docker-compose -v

Docker Compose version v2.39.1-desktop.1

The Docker Compose capability is now included with Docker via a Docker plugin and executed using docker compose versus requiring a separate docker-compose wrapper.

Functionally they are the same.

The docker-compose script is only required for some legacy cases.

|

$ docker compose --help

Usage: docker compose [OPTIONS] COMMAND

Define and run multi-container applications with Docker

...

$ docker-compose --help

Usage: docker compose [OPTIONS] COMMAND

Define and run multi-container applications with Docker

...6.6.1. docker-compose Test Drive

With the course baseline checked out, you should be able to perform the following. Your results for the first execution will also include the download of images.

$ docker compose -p ejava up -d mongodb postgres (1)(2) Creating ejava_postgres_1 ... done Creating ejava_mongodb_1 ... done

| 1 | -p option sets the project name to a well-known value (directory name is default) |

| 2 | up starts services and -d runs them all in the background |

$ docker compose -p ejava stop mongodb postgres (1) Stopping ejava_mongodb_1 ... done Stopping ejava_postgres_1 ... done $ docker compose -p ejava rm -f mongodb postgres (2) Going to remove ejava_mongodb_1, ejava_postgres_1 Removing ejava_mongodb_1 ... done Removing ejava_postgres_1 ... done

| 1 | stop pauses the running container |

| 2 | rm removes state assigned to the stopped container. -f does not request confirmation. |

6.7. MongoDB (later)

You will need MongoDB in the later 1/3 of the course. It is somewhat easy to install locally, but a mindless snap — configured exactly the way we need it to be — if we use Docker. Feel free to activate a free Atlas account if you wish, but what gets turned in for grading should either use a standard local URL (using Flapdoodle (via Maven), Docker, or Testcontainers).

If you have not and will not be installing Docker, you will need to install and set up a local instance of Mongo.

-

All platforms - MongoDB

Introduction to Enterprise Java Frameworks

copyright © 2026 jim stafford (jim.stafford@jhu.edu)

7. Introduction

7.1. Goals

The student will learn:

-

constructs and styles for implementing code reuse

-

what is a framework

-

what has enabled frameworks

-

a historical look at Java frameworks

7.2. Objectives

At the conclusion of this lecture, the student will be able to:

-

identify the key difference between a library and framework

-

identify the purpose for a framework in solving an application solution

-

identify the key concepts that enable a framework

-

identify specific constructs that have enabled the advancement of frameworks

-

identify key Java frameworks that have evolved over the years

8. Code Reuse

Code reuse is the use of existing software to create new software. [1]

We leverage code reuse to help solve either repetitive or complex tasks so that we are not repeating ourselves, we reduce errors, and we achieve more complex goals.

8.1. Code Reuse Trade-offs

On the positive side, we do this because we have confidence that we can delegate a portion of our job to code that has been proven to work. We should not need to again test what we are using.

On the negative side, reuse can add dependencies bringing additional size, complexity, and risk to our solution. If all you need is a spoon — do you need to bring the entire kitchen?

8.2. Code Reuse Constructs

Code reuse can be performed using several structural techniques

- Method Call

-

We can wrap functional logic within a method within our own code base. We can make calls to this method from the places that require that same task performed.

- Classes

-

We can capture state and functional abstractions in a set of classes. This adds some modularity to related reusable method calls.

- Interfaces

-

Abstract interfaces can be defined as placeholders for things needed but supplied elsewhere. This could be because of different options provided or details being supplied elsewhere.

- Modules

-

Reusable constructs can be packaged into separate physical modules so that they can be flexibly used or not used by our application.

8.3. Code Reuse Styles

There are two basic styles of code reuse, and they primarily have to with control.

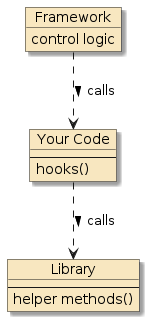

Figure 1. Library/ Framework/Code Relationship [2]

|

|

It’s not always a one-or-the-other style. Libraries can have mini frameworks within them. Even the JSON/XML parser example can be a mini-framework of customizations and extensions.

9. Frameworks

9.1. Framework Informal Description

A successful software framework is a body code that has been developed from the skeletons of successful and unsuccessful solutions of the past and present within a common domain of challenge. A framework is a generalization of solutions that provides for key abstractions, opportunity for specialization, and supplies default behavior to make the on-ramp easier and also appropriate for simpler solutions.

-

"We have done this before. This is what we need and this is how we do it."

A framework is much bigger than a pattern instantiation. A pattern is commonly at the level of specific object interactions. We typically have created or commanded something at the completion of a pattern — but we have a long way to go in order to complete our overall solution goal.

-

Pattern Completion: "that is not enough — we are not done"

-

Framework Completion: "I would pay (or get paid) for that!"

A successful framework is more than many patterns grouped together. Many patterns together is just a sea of calls — like a large city street at rush hour. There is a pattern of when people stop and go, make turns, speed up, or yield to let someone into traffic. Individual tasks are accomplished, but even if you could step back a bit — there is little to be understood by all the interactions.

-

"Where is everyone going?"

A framework normally has a complex purpose. We have typically accomplished something of significance or difficulty once we have harnessed a framework to perform a specific goal. Users of frameworks are commonly not alone. Similar accomplishments are achieved by others with similar challenges but varying requirements.

-

"This has gotten many to their target. You just need to supply …"

Well-designed and popular frameworks can operate at different scale — not just a one-size-fits-all all-of-the-time. This could be for different sized environments or simply for developers to have a workbench to learn with, demonstrate, or develop components for specific areas.

-

"Why does the map have to be actual size?"

9.2. Framework Characteristics

The following distinguishing features for a framework are listed on Wikipedia. [3] I will use them to structure some further explanations.

- Inversion of Control (IoC)

-

Unlike a procedural algorithm where our concrete code makes library calls to external components, a framework calls our code to do detailed things at certain points. All the complex but reusable logic has been abstracted into the framework.

-

"Don’t call us. We’ll call you." is a very common phrase to describe inversion of control

-

- Default Behavior

-

Users of the framework do not have to supply everything. One or more selectable defaults try to do the common, right thing.

-

Remember — the framework developers have solved this before and have harvested the key abstractions and processing from the skeletal remains of previous solutions

-

- Extensibility

-

To solve the concrete case, users of the framework must be able to provide specializations that are specific to their problem domain.

-

Framework developers — understanding the problem domain — have pre-identified which abstractions will need to be specialized by users. If they get that wrong, it is a sign of a bad framework.

-

- Non-modifiable Framework code

-

A framework has a tangible structure; well-known abstractions that perform well-defined responsibilities. That tangible aspect is visible in each of the concrete solutions and is what makes the product of a framework immediately understandable to other users of the framework.

-

"This is very familiar"

-

10. Framework Enablers

10.1. Dependency Injection

A process to enable Inversion of Control (IoC), whereby objects define their dependencies [4] and the manager assembles and connects the objects according to definitions.

The "manager" can be your setup code ("POJO" setup) or in realistic cases a "container" (see later definition)

10.2. POJO

A Plain Old Java Object (POJO) is what the name says it is. It is nothing more than an instantiated Java class.

A POJO normally will address the main purpose of the object and can be missing details or dependencies that give it complete functionality. Those details or dependencies are normally for specialization and extensibility that is considered outside the main purpose of the object.

-

Example: POJO may assume inputs are valid but does not know validation rules.

10.3. Component

A component is a fully assembled set of code (one or more POJOs) that can perform its duties for its clients. A component will normally have a well-defined interface and a well-defined set of functions it can perform.

A component can have zero or more dependencies on other components, but there should be no further mandatory assembly once your client code gains access to it.

10.4. Bean

A generalized term that tends to refer to an object in the range of a POJO to a component that encapsulates something. A supplied "bean" takes care of aspects that we do not need to have knowledge of.

In Spring, the objects that form the backbone of your application and that are managed by the Spring IoC container are called beans. A bean is an object that is instantiated, assembled, and managed by a Spring IoC container. Otherwise, a bean is simply one of many objects in your application. Beans, and the dependencies among them, are reflected in the configuration metadata used by a container. [4]

Introduction to the Spring IoC Container and Beans

| You will find that I commonly use the term "component" in the lecture notes — to be a bean that is fully assembled and managed by the container. |

10.5. Container

A container is the assembler and manager of components.

Both Docker and Spring are two popular containers that work at two different levels but share the same core responsibility.

10.5.1. Docker Container Definition

-

Docker supplies a container that assembles and packages software so that it can be generically executed on remote platforms.

A container is a standard unit of software that packages up code and all its dependencies, so the application runs quickly and reliably from one computing environment to another. [5]

Use containers to Build Share and Run your applications

10.5.2. Spring Container Definition

-

Spring supplies a container that assembles and packages software to run within a JVM.

(The container) is responsible for instantiating, configuring, and assembling the beans. The container gets its instructions on what objects to instantiate, configure, and assemble by reading configuration metadata. The configuration metadata is represented in XML, Java annotations, or Java code. It lets you express the objects that compose your application and the rich interdependencies between those objects. [6]

Container Overview

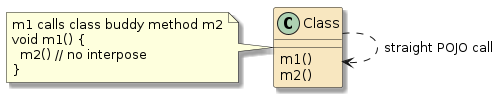

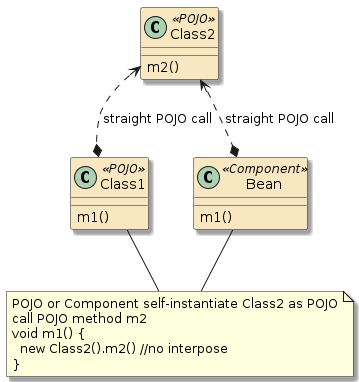

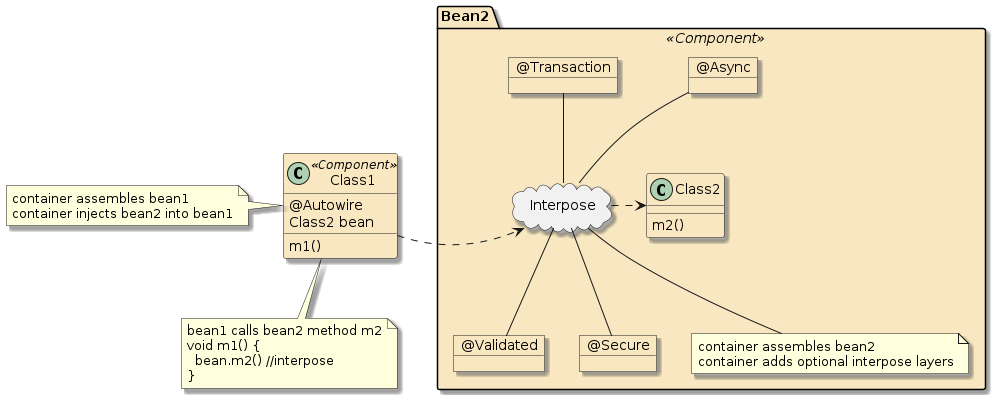

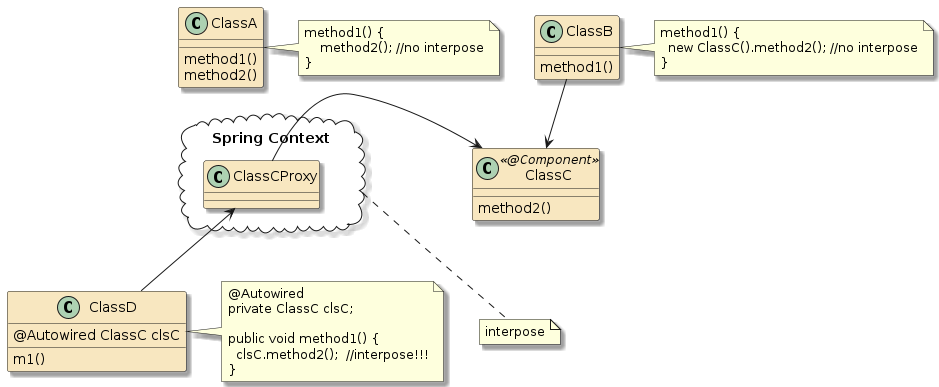

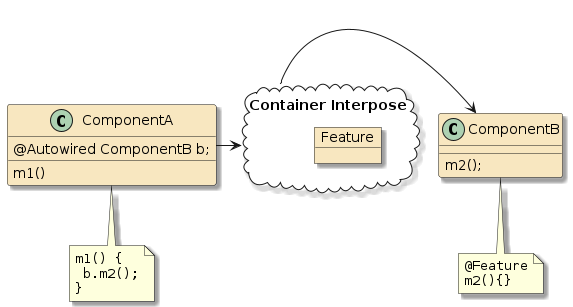

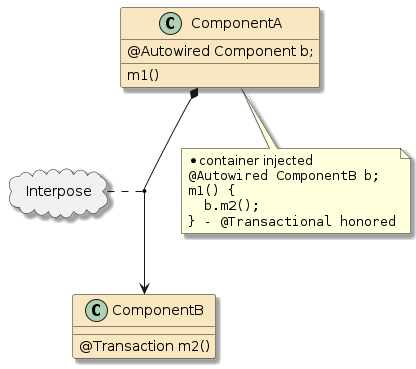

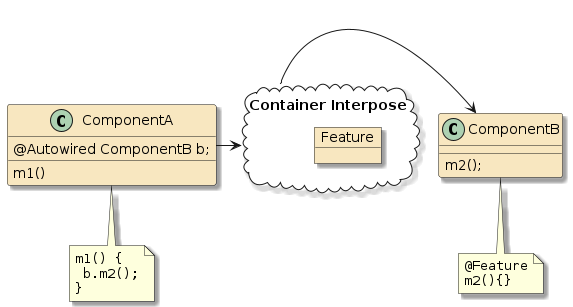

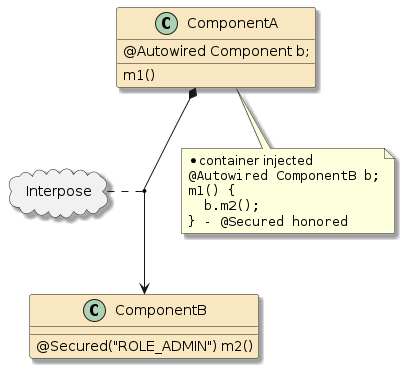

10.6. Interpose

(Framework/Spring) Containers do more than just configure and assemble simple POJOs. Containers can apply layers of functionality onto beans when wrapping them into components. Examples:

-

Perform validation

-

Enforce security constraints

-

Manage transaction for backend resource

-

Perform method in a separate thread

11. Language Impact on Frameworks

As stated earlier, frameworks provide a template of behavior — allowing for configuration and specialization. Over the years, the ability to configure and to specialize has gone through significant changes with language support.

11.1. XML Configurations

Prior to Java 5, the primary way to identify components was with an XML file. The XML file would identify a bean class provided by the framework user. The bean class would either implement an interface or comply with JavaBean getter/setter conventions.

11.1.1. Inheritance

Early JavaEE EJB defined a set of interfaces that represented things like stateless and stateful sessions and persistent entity classes. End-users would implement the interface to supply specializations for the framework. These interfaces had many callbacks that were commonly not needed but had to be tediously implemented with noop return statements — which produced some code bloat. Java interfaces did not support default implementations at that time.

11.1.2. Java Reflection

Early Spring bean definitions used some interface implementation, but more heavily leveraged compliance to JavaBean setter/getter behavior and Java reflection. Bean classes listed in the XML were scanned for methods that started with "set" or "get" (or anything else specially configured) and would form a call to them using Java reflection. This eliminated much of the need for strict interfaces and noop boilerplate return code.

11.2. Annotations

By the time Java 5 and annotations arrived in 2005 (late 2004), the Java framework worlds were drowning in XML. During that early time, everything was required to be defined. There were no defaults.

Although changes did not seem immediate, JavaEE frameworks like EJB 3.0/JPA 1.0 provided a substantial example for the framework communities in 2006. They introduced "sane" defaults and a primary (XML) and secondary (annotation) override system to give full choice and override of how to configure. Many things just worked right out of the box and only required a minor set of annotations to customize.

Spring went a step further and created a Java Configuration capability to be a 100% replacement for the old XML configurations. XML files were replaced by Java classes. XML bean definitions were replaced by annotated factory methods. Bean construction and injection was replaced by instantiation and setter calls within the factory methods.

Both JavaEE and Spring supported class level annotations for components that were very simple to instantiate and followed standard injection rules.

11.3. Lambdas

Java 8 brought in lambdas and functional processing, which from a strictly syntactical viewpoint is primarily a shorthand for writing an implementation to an interface (or abstract class) with only one abstract method.

You will find many instances in modern libraries where a call will accept a lambda function to implement core business functionality within the scope of the called method. Although — as stated — this is primarily syntactical sugar, it has made method definitions so simple that many more calls take optional lambdas to provide convenient extensions.

12. Key Frameworks

In this section, I am going to list a limited set of key Java framework highlights. In following the primarily Java path for enterprise frameworks, you will see a remarkable change over the years.

12.1. CGI Scripts

The Common Gateway Interface (CGI) was the cornerstone web framework when Java started coming onto the scene. [7] CGI was created in 1993 and, for the most part, was a framework for accepting HTTP calls, serving up static content and calling scripts to return dynamic content results. [8]

The important part to remember is that CGI was 100% stateless relative to backend resources. Each dynamic script called was a new, heavyweight operating system process and new connection to the database. Java programs were shoehorned into this framework as scripts.

12.2. Jakarta EE

Jakarta EE, formerly the Java Platform, Enterprise Edition (JavaEE) and Java 2 Platform, Enterprise Edition (J2EE) is a framework that extends the Java Platform, Standard Edition (Java SE) to be an end-to-end Web to database functionality and more. [9] Focusing only on the web and database portions here, JakartaEE provided a means to invoke dynamic scripts — written in Java — within a process thread and cached database connections.

The initial versions of Jakarta EE aimed big. Everything was a large problem and nothing could be done simply. It was viewed as being overly complex for most users. Spring was formed initially as a means to make J2EE simpler and ended up soon being an independent framework of its own.

J2EE first was released in 1999 and guided by Sun Microsystems. The Servlet portion was likely the most successful portion of the early release. The Enterprise Java Beans (EJB) portion was not realistically usable until JavaEE 5 / post 2006. By then, frameworks like Spring had taken hold of the target community.

In 2010, Sun Microsystems and control of both JavaSE and JavaEE was purchased by Oracle and seemed to progress but on a slow path. By JavaEE 8 in 2017, the framework had become very Spring-like with its POJO-based design. In 2017, Oracle transferred ownership of JavaEE to Jakarta. The JavaEE framework and libraries paused for a few years for naming changes and compatibility releases. [9]

12.3. Spring

Spring 1.0 was released in 2004 and was an offshoot of a book written by Rod Johnson "Expert One-on-One J2EE Design and Development" that was originally meant to explain how to be successful with J2EE. [10]

In a nutshell, Rod Johnson and the other designers of Spring thought that rather than starting with a large architecture like J2EE, one should start with a simple bean and scale up from there without boundaries. Small Spring applications were quickly achieved and gave birth to other frameworks like the Hibernate persistence framework (first released in 2003) which significantly influenced the EJB3/JPA standard. [11]

Spring and Spring Boot use many JavaEE(javax)/Jakarta libraries.

Spring 6 / Spring Boot 3 updated to Jakarta Maven artifact versions that renamed classes/properties to the jakarta package naming.

12.4. Jakarta Persistence API (JPA)

The Jakarta Persistence API (JPA), formerly the Java Persistence API, was developed as a part of the JavaEE community and provided a framework definition for persisting objects in a relational database. JPA fully replaced the original EJB Entity Beans standards of earlier releases. It has an API, provider, and user extensions. [12] The main providers of JPA were EclipseLink (formerly TopLink from Oracle) and Hibernate.

|

Frameworks should be based on the skeletons of successful implementations

Early EJB Entity Bean standards (< 3) were not thought to have been based on successful implementations.

The persistence framework failed to deliver, was modified with each major release, and eventually replaced by something that formed from industry successes.

|

JPA has been a wildly productive API. It provides simple API access and many extension points for DB/SQL-aware developers to supply more efficient implementations. JPA’s primary downside is likely that it allows Java developers to develop persistent objects without thinking of database concerns first. One could hardly blame that on the framework.

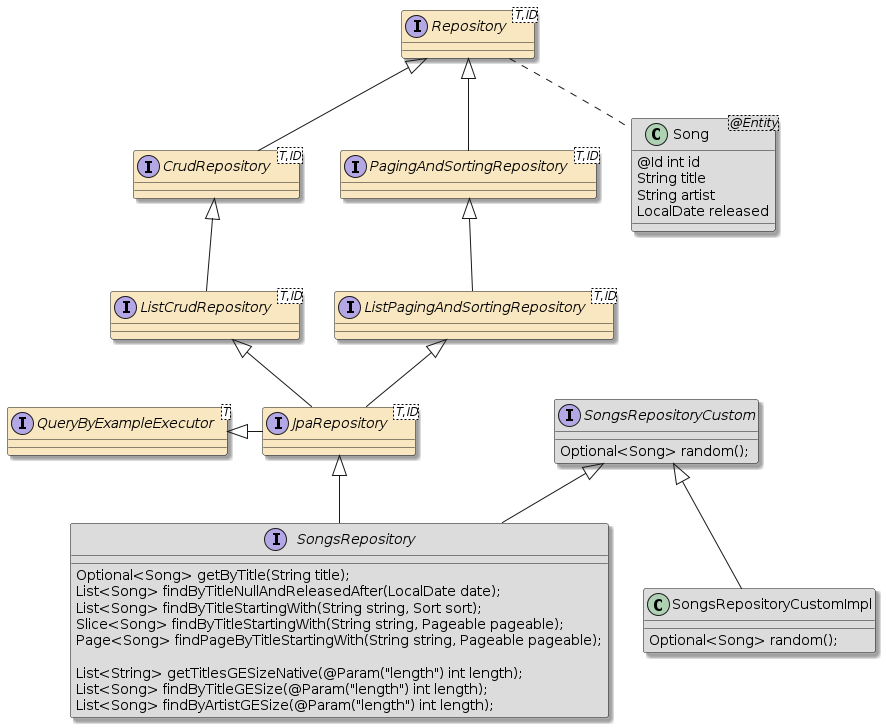

12.5. Spring Data

Spring Data is a data access framework centered around a core data object and its primary key — which is very synergistic with Domain-Driven Design (DDD) Aggregate and Repository concepts. [13]

-

Persistence models like JPA allow relationships to be defined to infinity and beyond.

-

In DDD, the persisted object has a firm boundary and only IDs are allowed to be expressed when crossing those boundaries.

-

These DDD boundary concepts are very consistent with the development of microservices — where large transactional monoliths are broken down into eventually consistent smaller services.

By limiting the scope of the data object relationships, Spring has been able to automatically define an extensive CRUD (Create, Read, Update, and Delete), query, and extension framework for persisted objects on multiple storage mechanisms.

We will be working with Spring Data JPA and Spring Data Mongo in this class. With the bounding DDD concepts, the two frameworks have an amazing amount of API synergy between them.

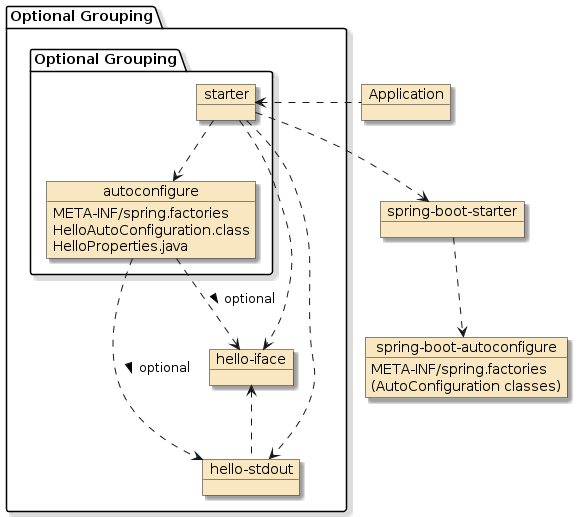

12.6. Spring Boot

Spring Boot was first released in 2014. Rather than take the "build anything you want, any way you want" approach in Spring, Spring Boot provides a framework for providing an opinionated view of how to build applications. [14]

-

By adding a dependency, a default implementation is added with "sane" defaults.

-

By setting a few properties, defaults are customized to your desired settings.

-

By defining a few beans, you can override the default implementations with local choices.

There is no external container in Spring Boot. Everything gets boiled down to an executable JAR and launched by a simple Java main (and a lot of other intelligent code).

Our focus will be on Spring Boot, Spring, and lower-level Spring and external frameworks.

13. Summary

In this module we:

-

identified the key differences between a library and framework

-

identify the purpose for a framework in solving an application solution

-

identify the key concepts that enable a framework

-

identify specific constructs that have enabled the advance of frameworks

-

identify key Java frameworks that have evolved over the years

Pure Java Main Application

copyright © 2026 jim stafford (jim.stafford@jhu.edu)

14. Introduction

This material provides an introduction to building a bare bones Java application using a single, simple Java class, packaging that in a Java ARchive (JAR), and executing it two ways:

-

as a class in the classpath

-

as the Main-Class of a JAR

14.2. Objectives

At the conclusion of this lecture and related exercises, the student will be able to:

-

create source code for an executable Java class

-

add that Java class to a Maven module

-

build the module using a Maven pom.xml

-

execute the application using a classpath

-

configure the application as an executable JAR

-

execute an application packaged as an executable JAR

15. Simple Java Class with a Main

Our simple Java application starts with a public class with a static main() method that optionally accepts command-line arguments from the caller

package info.ejava.examples.app.build.javamain;

import java.util.List;

public class SimpleMainApp { (1)

public static void main(String...args) { (2) (3)

System.out.println("Hello " + List.of(args));

}

}| 1 | public class |

| 2 | implements a static main() method |

| 3 | optionally accepts arguments |

16. Project Source Tree

This class is placed within a module source tree in the

src/main/java directory below a set of additional directories (info/ejava/examples/app/build/javamain)

that match the Java package name of the class (info.ejava.examples.app.build.javamain)

|-- pom.xml (1)

`-- src

|-- main (2)

| |-- java

| | `-- info

| | `-- ejava

| | `-- examples

| | `-- app

| | `-- build

| | `-- javamain

| | `-- SimpleMainApp.java

| `-- resources (3)

`-- test (4)

|-- java

`-- resources| 1 | pom.xml will define our project artifact and how to build it |

| 2 | src/main will contain the pre-built, source form of our artifacts that will be part of our primary JAR output for the module |

| 3 | src/main/resources is commonly used for property files or other resource files

read in during the program execution |

| 4 | src/test will contain the pre-built, source form of our test artifacts. These will not be part of the

primary JAR output for the module |

17. Building the Java Archive (JAR) with Maven

In setting up the build within Maven, I am going to limit the focus to just compiling our simple Java class and packaging that into a standard Java JAR.

17.1. Add Core pom.xml Document

Add the core document with required GAV information (groupId, artifactId, version) to the pom.xml

file at the root of the module tree. Packaging is also required but will have a default of jar if not supplied.

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>info.ejava.examples.app</groupId> (1)

<artifactId>java-app-example</artifactId> (2)

<version>6.1.1</version> (3)

<packaging>jar</packaging> (4)

<project>| 1 | groupId |

| 2 | artifactId |

| 3 | version |

| 4 | packaging |

|

Module directory should be the same name/spelling as artifactId to align with default directory naming patterns used by plugins. |

|

Packaging specification is optional in this case. The default packaging is |

17.2. Add Optional Elements to pom.xml

-

name

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>info.ejava.examples.app</groupId>

<artifactId>java-app-example</artifactId>

<version>6.1.1</version>

<packaging>jar</packaging>

<name>App::Build::Java Main Example</name> (1)

<project>| 1 | name appears in Maven build output but not required |

17.3. Define Plugin Versions

Define plugin versions so the module can be deterministically built in multiple environments

-

Each version of Maven has a set of default plugins and plugin versions

-

Each plugin version may or may not have a set of defaults (e.g., not Java 21) that are compatible with our module

<properties>

<java.target.version>21</java.target.version>

<maven-compiler-plugin.version>3.14.0</maven-compiler-plugin.version>

<maven-jar-plugin.version>3.4.2</maven-jar-plugin.version>

</properties>

<pluginManagement>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>${maven-compiler-plugin.version}</version>

<configuration>

<release>${java.target.version}</release>

</configuration>

</plugin>

</plugins>

</pluginManagement>The jar packaging will automatically activate the maven-compiler-plugin and maven-jar-plugin.

Our definition above identifies the version of the plugin to be used (if used) and any desired

configuration of the plugin(s).

17.4. pluginManagement vs. plugins

-

Use

pluginManagementto define a plugin if it activated in the module build-

useful to promote consistency in multi-module builds

-

commonly seen in parent modules

-

-

Use

pluginsto declare that a plugin be active in the module build-

ideally only used by child modules

-

our child module indirectly activated several plugins by using the

jarpackaging type

-

18. Build the Module

Maven modules are commonly built with the following commands/ phases

-

cleanremoves previously built artifacts -

packagecreates primary artifact(s) (e.g., JAR)-

processes main and test resources

-

compiles main and test classes

-

runs unit tests

-

builds the archive

-

[INFO] Scanning for projects...

[INFO]

[INFO] --------------< info.ejava.examples.app:java-app-example >--------------

[INFO] Building App::Build::Java App Example 6.1.1

[INFO] from pom.xml

[INFO] --------------------------------[ jar ]---------------------------------

[INFO]

[INFO] --- maven-clean-plugin:3.4.0:clean (default-clean) @ java-app-example ---

[INFO] Deleting .../java-app-example/target

[INFO]

[INFO] --- maven-resources-plugin:3.3.1:resources (default-resources) @ java-app-example ---

[INFO] Copying 0 resource from src/main/resources to target/classes

[INFO]

[INFO] --- maven-compiler-plugin:3.13.0:compile (default-compile) @ java-app-example ---

[INFO] Recompiling the module because of changed source code.

[INFO] Compiling 1 source file with javac [debug parameters release 17] to target/classes

[INFO]

[INFO] --- maven-resources-plugin:3.3.1:testResources (default-testResources) @ java-app-example ---

[INFO] Copying 0 resource from src/test/resources to target/test-classes

[INFO]

[INFO] --- maven-compiler-plugin:3.13.0:testCompile (default-testCompile) @ java-app-example ---

[INFO] Recompiling the module because of changed dependency.

[INFO]

[INFO] --- maven-surefire-plugin:3.3.1:test (default-test) @ java-app-example ---

[INFO]

[INFO] --- maven-jar-plugin:3.4.2:jar (default-jar) @ java-app-example ---

[INFO] Building jar: .../java-app-example/target/java-app-example-6.1.1.jar

[INFO]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 1.783 s19. Project Build Tree

The produced build tree from mvn clean package contains the following key artifacts (and more)

|-- pom.xml

|-- src

`-- target

|-- classes (1)

| `-- info

| `-- ejava

| `-- examples

| `-- app

| `-- build

| `-- javamain

| `-- SimpleMainApp.class

...

|-- java-app-example-6.1.1.jar (2)

...

`-- test-classes (3)| 1 | target/classes for built artifacts from src/main |

| 2 | primary artifact(s) (e.g., Java Archive (JAR)) |

| 3 | target/test-classes for built artifacts from src/test |

20. Resulting Java Archive (JAR)

Maven adds a few extra files to the META-INF directory that we can ignore. The key files we want to focus on are:

-

SimpleMainApp.classis the compiled version of our application -

META-INF/MANIFEST.MF contains properties relevant to the archive

$ jar tf target/java-app-example-*-SNAPSHOT.jar | egrep -v "/$" | sort

META-INF/MANIFEST.MF

META-INF/maven/info.ejava.examples.app/java-app-example/pom.properties

META-INF/maven/info.ejava.examples.app/java-app-example/pom.xml

info/ejava/examples/app/build/javamain/SimpleMainApp.class

|

21. Execute the Application

The application is executed by

-

invoking the

javacommand -

adding the JAR file (and any other dependencies) to the classpath

-

specifying the fully qualified class name of the class that contains our main() method

$ java -cp target/java-app-example-*-SNAPSHOT.jar info.ejava.examples.app.build.javamain.SimpleMainApp

Output:

Hello []$ java -cp target/java-app-example-*-SNAPSHOT.jar info.ejava.examples.app.build.javamain.SimpleMainApp arg1 arg2 "arg3 and 4"

Output:

Hello [arg1, arg2, arg3 and 4]-

example passed three (3) arguments separated by spaces

-

third argument (

arg3 and arg4) used quotes around the entire string to escape spaces and have them included in the single parameter

-

22. Configure Application as an Executable JAR

To execute a specific Java class within a classpath is conceptually simple. However, there is a lot more to know than we need to when there may be only a single entry point. In the following sections we will assign a default Main-Class by using the MANIFEST.MF properties

22.1. Add Main-Class property to MANIFEST.MF

$ unzip -qc target/java-app-example-*-SNAPSHOT.jar META-INF/MANIFEST.MF

Manifest-Version: 1.0

Created-By: Maven JAR Plugin 3.4.2

Build-Jdk-Spec: 21

Main-Class: info.ejava.examples.app.build.javamain.SimpleMainApp22.2. Automate Additions to MANIFEST.MF using Maven

One way to surgically add that property is through the maven-jar-plugin

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-jar-plugin</artifactId>

<version>${maven-jar-plugin.version}</version>

<configuration>

<archive>

<manifest>

<mainClass>info.ejava.examples.app.build.javamain.SimpleMainApp</mainClass>

</manifest>

</archive>

</configuration>

</plugin>|

This is a very specific plugin configuration that would only apply to a specific child module.

Therefore, we would place this in a |

23. Execute the JAR versus just adding to classpath

The executable JAR is executed by

-

invoking the

javacommand -

adding the -jar option

-

adding the JAR file (and any other dependencies) to the classpath

$ java -jar target/java-app-example-*-SNAPSHOT.jar

Output:

Hello []$ java -jar target/java-app-example-*-SNAPSHOT.jar one two "three and four"

Output:

Hello [one, two, three and four]-

example passed three (3) arguments separated by spaces

-

third argument (

three and four) used quotes around the entire string to escape spaces and have them included in the single parameter

-

24. Configure pom.xml to Test

At this point, we are ready to create an automated execution of our JAR as a part of the build.

We have to do that after the packaging phase and will leverage the integration-test

Maven phase

<build>

...

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-antrun-plugin</artifactId> (1)

<executions>

<execution>

<id>execute-jar</id>

<phase>integration-test</phase> (4)

<goals>

<goal>run</goal>

</goals>

<configuration>

<tasks>

<java fork="true" failonerror="true" classname="info.ejava.examples.app.build.javamain.SimpleMainApp"> (2)

<classpath>

<pathelement path="${project.build.directory}/${project.build.finalName}.jar"/>

</classpath>

<arg value="Ant-supplied java -cp"/>

<arg value="Command Line"/>

<arg value="args"/>

</java>

<java fork="true" failonerror="true"

jar="${project.build.directory}/${project.build.finalName}.jar"> (3)

<arg value="Ant-supplied java -jar"/>

<arg value="Command Line"/>

<arg value="args"/>

</java>

</tasks>

</configuration>

</execution>

</executions>

</plugin>

</plugins>

</build>| 1 | Using the maven-ant-run plugin to execute Ant task |

| 2 | Using the java Ant task to execute shell java -cp command line |

| 3 | Using the java Ant task to execute shell java -jar command line |

| 4 | Running the plugin during the integration-phase

|

24.1. Execute JAR as part of the build

$ mvn clean verify

[INFO] Scanning for projects...

[INFO]

[INFO] -------------< info.ejava.examples.app:java-app-example >--------------

...

[INFO] --- maven-jar-plugin:3.4.2:jar (default-jar) @ java-app-example -(1)

[INFO] Building jar: .../java-app-example/target/java-app-example-6.1.1.jar

[INFO]

...

[INFO] --- maven-antrun-plugin:3.1.0:run (execute-jar) @ java-app-example ---

[INFO] Executing tasks (2)

[INFO] [java] Hello [Ant-supplied java -cp, Command Line, args]

[INFO] [java] Hello [Ant-supplied java -jar, Command Line, args]

[INFO] Executed tasks

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS| 1 | Our plugin is executing |

| 2 | Our application was executed and the results displayed |

25. Summary

-

The JVM will execute the static

main()method of the class specified in the java command -

The class must be in the JVM classpath

-

Maven can be used to build a JAR with classes

-

A JAR can be the subject of a java execution

-

The Java

META-INF/MANIFEST.MFMain-Classproperty within the target JAR can express the class with themain()method to execute -

The maven-jar-plugin can be used to add properties to the

META-INF/MANIFEST.MFfile -

A Maven build can be configured to execute a JAR

Simple Spring Boot Application

copyright © 2026 jim stafford (jim.stafford@jhu.edu)

26. Introduction

This material makes the transition from creating and executing a simple Java main application to a Spring Boot application.

26.2. Objectives

At the conclusion of this lecture and related exercises, the student will be able to:

-

extend the standard Maven

jarmodule packaging type to include core Spring Boot dependencies -

construct a basic Spring Boot application

-

build and execute an executable Spring Boot JAR

-

define a simple Spring component and inject that into the Spring Boot application

27. Spring Boot Maven Dependencies

Spring Boot provides a spring-boot-starter-parent

(gradle source,

pom.xml) pom that can be used as a parent pom for our Spring Boot modules.

[15]

This defines version information for dependencies and plugins for building Spring Boot artifacts — along with an opinionated view of how the module should be built.

spring-boot-starter-parent inherits from a spring-boot-dependencies

(gradle source,

pom.xml)

pom that provides a definition of artifact versions without an opinionated view of how the module is built.

This pom can be imported by modules that already inherit from a local Maven parent — which would be common.

This is the demonstrated approach we will take here. We will also include demonstration of how the build constructs are commonly spread across parent and local poms.

| Spring Boot has converted over to gradle and posts a pom version of the gradle artifact to Maven central repository as a part of their build process. |

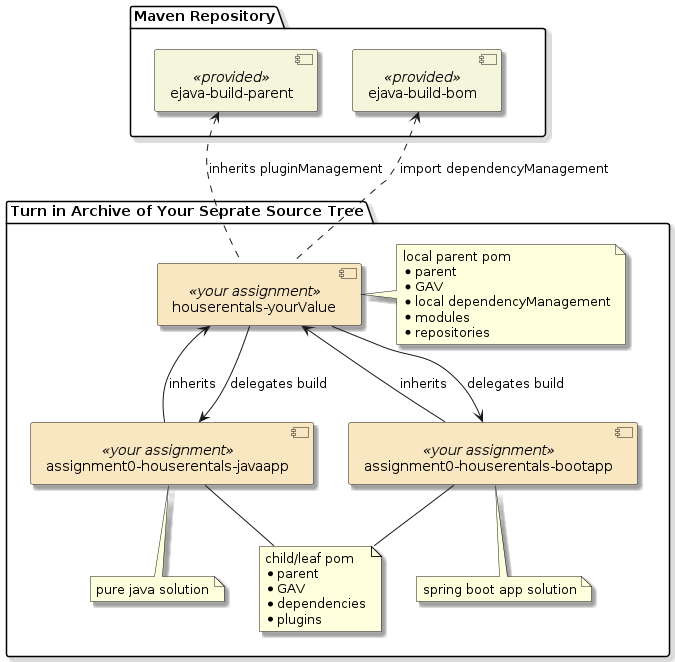

28. Parent POM

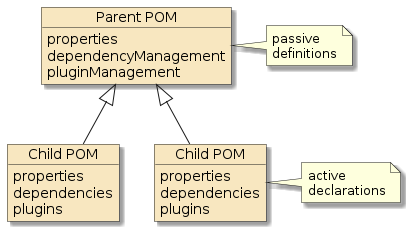

We are likely to create multiple Spring Boot modules and would be well-advised to begin by creating a local parent pom construct to house the common passive definitions. By passive definitions (versus active declarations), I mean definitions for the child poms to use if needed versus mandated declarations for each child module. For example, a parent pom may define the JDBC driver to use when needed, but not all child modules will need a JDBC driver nor a database for that matter. In that case, we do not want the parent pom to actively declare a dependency. We just want the parent to passively define the dependency that the child can optionally choose to actively declare. This construct promotes consistency among all the modules.

|

"Root"/parent poms should define dependencies and plugins for consistent re-use among child poms and use dependencyManagement and pluginManagement elements to do so. |

|

"Child"/concrete/leaf poms declare dependencies and plugins to be used when building that module and try to keep dependencies to a minimum. |

|

"Prototype" poms are a blend of root and child pom concepts. They are a nearly-concrete, parent pom that can be extended by child poms but actively declare a select set of dependencies and plugins to allow child poms to be as terse as possible. |

28.1. Define Version for Spring Boot artifacts

I am using a technique below of defining the value in a property so that it is easy to locate and change as well as re-use elsewhere if necessary.

# Place this declaration in an inherited parent pom

<properties>

<springboot.version>3.5.5</springboot.version> (1)

</properties>| 1 | default value has been declared in imported ejava-build-bom |

|

Property values can be overruled at build time by supplying a system property on the command line "-D(name)=(value)" |

28.2. Import springboot-dependencies-plugin

Import springboot-dependencies-plugin. This will define dependencyManagement

for us for many artifacts that are relevant to our Spring Boot development.

# Place this declaration in an inherited parent pom

<dependencyManagement> (1)

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-dependencies</artifactId>

<version>${springboot.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

</dependencies>

</dependencyManagement>| 1 | import is within examples-root for class examples, which is a grandparent of this example |

29. Local Child/Leaf Module POM

The local child module pom.xml is where the module is physically built. Although Maven modules can have multiple levels of inheritance — where each level is a child of their parent — the child module I am referring to here is the leaf module where the artifacts are meant to be really built. Everything defined above it is primarily used as a common definition (through dependencyManagement and pluginManagement) to simplify the child pom.xml and to promote consistency among sibling modules. It is the job of the leaf module to activate these definitions that are appropriate for the type of module being built.

29.2. Declare dependency on artifacts used

Realize the parent definition of the spring-boot-starter dependency by declaring

it within the child dependencies section.

For where we are in this introduction, only the above dependency will be necessary.

The imported spring-boot-dependencies will take care of declaring the version#

# Place this declaration in the child/leaf pom building the JAR archive

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter</artifactId>

<!--version --> (1)

</dependency>

</dependencies>| 1 | parent has defined (using import in this case) the version for all children to consistently use |

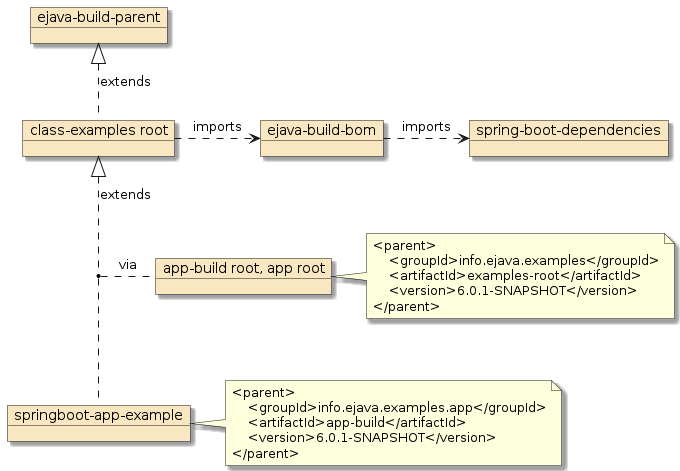

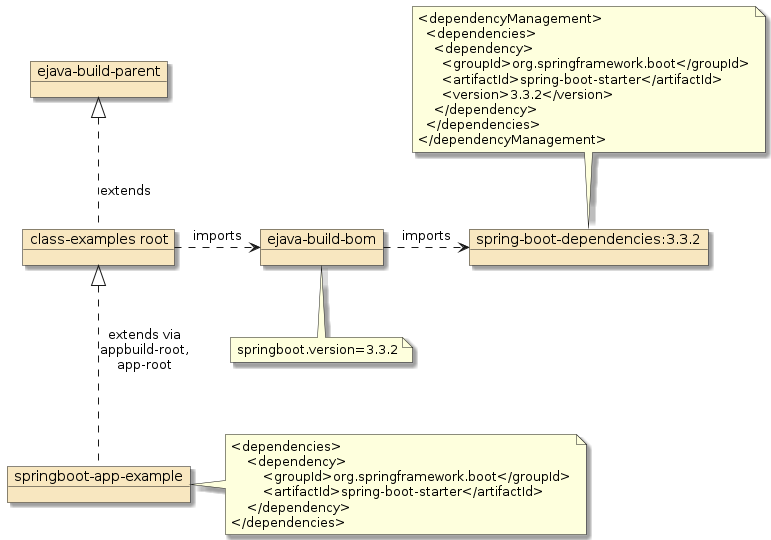

The figure below shows the parent poms being the source of the passive dependency definitions and the child being the source of the active dependency declarations.

-

the parent is responsible for defining the version# for dependencies used

-

the child is responsible for declaring what dependencies are needed and adopts the parent version definition

An upgrade to a future dependency version should not require a change of a child module declaration if this pattern is followed.

30. Simple Spring Boot Application Java Class

With the necessary dependencies added to our build classpath, we now have enough to begin defining a simple Spring Boot Application.

package info.ejava.springboot.examples.app.build.springboot;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication (3)

public class SpringBootApp {

public static void main(String... args) { (1)

System.out.println("Running SpringApplication");

SpringApplication.run(SpringBootApp.class, args); (2)

System.out.println("Done SpringApplication");

}

}| 1 | Define a class with a static main() method |

| 2 | Initiate Spring application bootstrap by invoking SpringApplication.run() and passing a) application class and b) args passed into main() |

| 3 | Annotate the class with @SpringBootApplication |

| Startup can, of course, be customized (e.g., change the printed banner, registering event listeners) |

30.1. Module Source Tree

The source tree will look similar to our previous Java main example.

|-- pom.xml

`-- src

|-- main

| |-- java

| | `-- info

| | `-- ejava

| | `-- examples

| | `-- app

| | `-- build

| | `-- springboot

| | `-- SpringBootApp.java

| `-- resources

`-- test

|-- java

`-- resources31. Spring Boot Executable JAR

At this point, we can likely execute the Spring Boot Application within the IDE but instead, lets go back to the pom and construct a JAR file to be able to execute the application from the command line.

31.1. Building the Spring Boot Executable JAR

We saw earlier how we could build a standard executable JAR using the maven-jar-plugin.

However, there were some limitations to that approach — especially the fact that a standard Java JAR cannot house dependencies to form a self-contained classpath and Spring Boot will need additional JARs to complete the application bootstrap.

Spring Boot uses a custom executable JAR format that can be built with the aid of the

spring-boot-maven-plugin.

Let’s extend our pom.xml file to enhance the standard JAR to be a Spring Boot executable JAR.

31.1.1. Declare spring-boot-maven-plugin

The following snippet shows the configuration for a spring-boot-maven-plugin that defines a default execution to build the Spring Boot executable JAR for all child modules that declare using it.

In addition to building the Spring Boot executable JAR, we are setting up a standard in the parent for all children to have their follow-on JAR classified separately as a bootexec.

classifier is a core Maven construct and is meant to label sibling artifacts to the original Java JAR for the module.

Other types of classifiers are source, schema, javadoc, etc.

bootexec is a value we made up.

bootexec is a value we made up.

|

By default, the repackage goal would have replaced the Java JAR with the Spring Boot executable JAR.

That would have left an ambiguous JAR artifact in the repository — we would not easily know its JAR type.

This will help eliminate dependency errors during the semester when we layer N+1 assignments on top of layer N.

Only standard Java JARs can be used in classpath dependencies.

<properties>

<spring-boot.classifier>bootexec</spring-boot.classifier>

</properties>

...

<build>

<pluginManagement>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

<configuration>

<classifier>${spring-boot.classifier}</classifier> (4)

</configuration>

<executions>

<execution>

<id>build-app</id> (1)

<phase>package</phase> (2)

<goals>

<goal>repackage</goal> (3)

</goals>

</execution>

</executions>

</plugin>

...

</plugins>

</pluginManagement>

</build>| 1 | id used to describe execution and required when having more than one |

| 2 | phase identifies the maven goal in which this plugin runs |

| 3 | repackage identifies the goal to execute within the spring-boot-maven-plugin |

| 4 | adds a -bootexec to the executable JAR’s name |

We can do much more with the spring-boot-maven-plugin on a per-module basis (e.g., run the application from within Maven).

We are just starting at construction at this point.

31.1.2. Build the JAR

$ mvn clean package

[INFO] Scanning for projects...

...

[INFO] --- maven-jar-plugin:3.4.2:jar (default-jar) @ springboot-app-example ---

[INFO] Building jar: .../target/springboot-app-example-6.1.1.jar (1)

[INFO]

[INFO] --- spring-boot-maven-plugin:3.5.5:repackage (build-app) @ springboot-app-example ---

[INFO] Attaching repackaged archive .../target/springboot-app-example-6.1.1-bootexec.jar with classifier bootexec (2)| 1 | standard Java JAR is built by the maven-jar-plugin |

| 2 | standard Java JAR is augmented by the spring-boot-maven-plugin |

31.2. Java MANIFEST.MF properties

The spring-boot-maven-plugin augmented the standard JAR by adding a few properties to the MANIFEST.MF file

$ unzip -qc target/springboot-app-example-6.1.1-bootexec.jar META-INF/MANIFEST.MF

Manifest-Version: 1.0

Created-By: Maven JAR Plugin 3.2.2

Build-Jdk-Spec: 21

Main-Class: org.springframework.boot.loader.launch.JarLauncher (1)

Start-Class: info.ejava.examples.app.build.springboot.SpringBootApp (2)

Spring-Boot-Version: 3.5.5

Spring-Boot-Classes: BOOT-INF/classes/

Spring-Boot-Lib: BOOT-INF/lib/

Spring-Boot-Classpath-Index: BOOT-INF/classpath.idx

Spring-Boot-Layers-Index: BOOT-INF/layers.idx| 1 | Main-Class was set to a Spring Boot launcher |

| 2 | Start-Class was set to the class we defined with @SpringBootApplication |

31.3. JAR size

Notice that the size of the Spring Boot executable JAR is significantly larger than the original standard JAR.

$ ls -lh target/*jar* | grep -v sources | cut -d\ -f9-99

10M Aug 28 15:19 target/springboot-app-example-6.1.1-bootexec.jar (2)

4.3K Aug 28 15:19 target/springboot-app-example-6.1.1.jar (1)| 1 | The original Java JAR with Spring Boot annotations was 4.3KB |

| 2 | The Spring Boot JAR is 10MB |

31.4. JAR Contents

Unlike WARs, a standard Java JAR does not provide a way to embed dependency JARs. Common approaches to embed dependencies within a single JAR include a "shaded" JAR where all dependency JAR are unwound and packaged as a single "uber" JAR

-

positives

-

works

-

follows standard Java JAR constructs

-

-

negatives

-

obscures contents of the application

-

problem if multiple source JARs use files with same path/name

-

Spring Boot creates a custom WAR-like structure

BOOT-INF/classes/info/ejava/examples/app/build/springboot/AppCommand.class

BOOT-INF/classes/info/ejava/examples/app/build/springboot/SpringBootApp.class (3)

BOOT-INF/lib/javax.annotation-api-2.1.1.jar (2)

...

BOOT-INF/lib/spring-boot-3.5.5.jar

BOOT-INF/lib/spring-context-6.2.10.jar

BOOT-INF/lib/spring-beans-6.2.10.jar

BOOT-INF/lib/spring-core-6.2.10.jar

...

META-INF/MANIFEST.MF

META-INF/maven/info.ejava.examples.app/springboot-app-example/pom.properties

META-INF/maven/info.ejava.examples.app/springboot-app-example/pom.xml

org/springframework/boot/loader/launch/ExecutableArchiveLauncher.class

org/springframework/boot/loader/launch/JarLauncher.class (1)

...

org/springframework/boot/loader/util/SystemPropertyUtils.class| 1 | Spring Boot loader classes hosted at the root / |

| 2 | Local application classes hosted in /BOOT-INF/classes |

| 3 | Dependency JARs hosted in /BOOT-INF/lib |